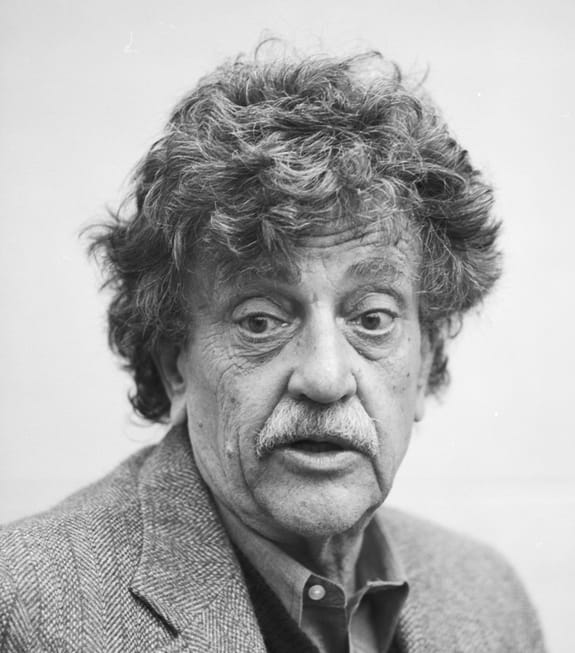

In Kurt Vonnegut’s dystopian short story ‘Harrison Bergeron’, the world is regulated through handicaps designed to make everyone equal. The eponymous protagonist, a tall, powerfully built young man, is weighed down with 300 pounds of scrap metal, while attractive people are forced to wear grotesque masks.

Harrison’s father, George, a man of above-average intelligence, must wear a ‘handicap radio’ in his ear, which emits a sharp, unpleasant noise every twenty seconds or so to stop him from taking unfair advantage of his brain.

Vonnegut’s egalitarian dystopia sprang to mind as I watched (virtually) the recent SXSW festival, which takes place every year in Austin, Texas. Technology, and the ethics of technology, was a central theme of many of the talks, and I was particularly interested in some of the conversations around neural prosthetics (or neuroprosthetics).

Neural prostheses are described by Wikipedia as “a series of devices that can substitute a motor, sensory or cognitive modality that might have been damaged as a result of an injury or a disease.”

Cochlear implants are a good example. According to Wikipedia, these devices substitute for the functions performed by the eardrum and stapes, while simulating the frequency analysis performed in the cochlea.

“A microphone on an external unit gathers the sound and processes it; the processed signal is then transferred to an implanted unit that stimulates the auditory nerve through a microelectrode array. Through the replacement or augmentation of damaged senses, these devices intend to improve the quality of life for those with disabilities,” the Wikipedia page explains.


The ethics of technology

Neural prostheses have been around for decades, but more recent technologies, such as the internet of things, have opened up a new range of possibilities for the concept. They have also raised a new series of questions. If the technology can be used to help those with brain injuries, what other conditions could it be used for? Presumably it could be used for common conditions such as attention deficit disorders, but where does one draw the line? And at what point do these devices change us from who we really are?

Furthermore, what if these implants are used as a means of control or exploitation? Could they act like George Bergeron’s radio, prompting us not to think about certain things or, worse, manipulating us into thinking a certain way or responding to external stimuli in predetermined ways?

Many (and not just the cynics) will point out that such manipulative devices already exist, in the smartphones and tablets we use every day. They track our movements, monitor the posts we like on social media and feed us more of the things we like, in ways that can be monetised by the social media company.

They do this in simple ways but also in ways that are complex and arguably invasive. Dwell a while on a video about how an emaciated and traumatised stray dog finds a loving home and is now happy and well-fed, and the social media platform can draw conclusions about what your emotional triggers are and use this to feed advertisements to you, at times when you are most likely to purchase.

Using our emotions and aspirations to encourage us to make purchases is nothing new. Aficionados of the television series ‘Mad Men’ will know that these techniques were around even before the 1960s, when the series was set. Tobacco companies famously used attractive models, sports stars and even medical doctors to promote cigarettes for years before laws were introduced curtailing advertising. Household products are often associated with family love and wholesomeness in advertisements.


What sets the new social media apart is their ability to do all of this more accurately (the old saying – “I know 50% of advertising doesn’t work; I just don’t know which 50%” – no longer applies) and in ways where consumers no longer have the agency that they used to have.

Another problem arises when consumers are fed only media that conforms to their world view or that pushes their views in a certain direction. When people are fed a diet of conspiracy theories and fake news on social media, the effects on society can be seriously damaging. Efforts to build support for vaccine programmes, for example, can be set back. Echo chambers are created that polarise people and foment populism. This can even lead to civil unrest, as we saw in the US in early January.

These effects have led to calls to regulate social media and other technology giants more vigorously. The companies themselves have responded to criticism with tighter checks on false news and even the banning of certain accounts. After the Capitol riot in January, Twitter and Facebook banned outgoing President Donald Trump while Amazon Web Services de-platformed Parler, the social media outlet favoured by many on the right in the US. Too little, too late, said some. An infringement on the right to free speech, said others.

Quis custodiet ipsos custodes?

Who will keep an eye on the guards themselves? The question posed by the ancient Roman poet Juvenal is every bit as relevant today. Under US law, the actions of Twitter, Facebook and Amazon were entirely legal, since all three are private businesses with their own terms and conditions, with which their users must comply. But it is not ideal when private companies that are huge platforms in their own right can be the sole decision makers about what their users may say. What happens when the algorithm gets it wrong? How would we feel if we were the ones being de-platformed? And what assurance do we have that the social media companies will act in the public interest at all times?

Just as privately owned stock exchanges have to comply with the securities laws of the countries in which they are based, the social media companies should arguably be subject to laws and regulations on fake news, incitement and the unethical manipulation of users’ emotions for profit.

But this, too, is difficult to get right, since some degree of emotional manipulation is part and parcel of all marketing models, as noted above.

Perhaps of greater concern is the role of government. One may hope that established democracies will be able to manage the various conflicts of interest in an imperfect but workable manner, but what about authoritarian regimes? We have seen the military junta in Myanmar impose a number of internet blackouts and shutdowns of social media sites to counteract popular protests. The Ugandan government also shut down the internet in the run-up to its recent elections.


China, the emerging global power, runs a system known as social credit, whereby individuals and businesses are monitored and evaluated for trustworthy behaviour. While this includes fraudulent financial behaviour, it can also cover such infractions as making a restaurant booking and not showing up, jaywalking or eating on a train. A poor social credit score can lead to restrictions on travel, exclusion from certain schools or jobs, or simply being shunned by friends on social media.

Technology, especially surveillance technology, is what makes monitoring and scoring on the scale of a social credit system possible.

The system enjoys support among many Chinese people. A survey by the Free University of Berlin found "a surprisingly high degree of approval of social credit systems across respondent groups" and that "more socially advantaged citizens (wealthier, better-educated and urban residents) show the strongest approval … along with older people". Nonetheless, critics argue that the system is not only an invasion of individuals’ privacy but can also be used to target minorities or to stifle free speech. It is difficult to see a social credit system enjoying similar support in Western democracies, even if it were to lead to better behaviour in traffic or less littering.


Tackling inequality – or just making it go away

Another ethical concern about new technologies is that they could heighten inequality in society. In a world of Big Data, in which our everyday actions and behaviours have become a commodity for data gatherers to monetise, we may end up losing much of our privacy, or all of it, as the Chinese social credit system implies. The very rich, meanwhile, may be in a position to get the most out of the technology while, in effect, buying their privacy.

The world of virtual reality already presents some ethical questions. Augmented and mixed reality will be powerful tools and will have all sorts of uses, from training to therapy (think of using mixed reality to help a person overcome arachnophobia, for example).

Diminished reality is a technology in the same field, whereby certain elements are removed from sight or hearing. This can be useful for surgeons, for example, by removing from sight the tissue covering the organ that the surgeon is working on. Diminished reality can also be used to remove background noises or unpleasant sensations.

But what if diminished reality is used to remove the sight of poverty, litter or urban degradation? Instead of encouraging society to deal with its problems, we may end up simply removing them from view, at least from the view of those capable of doing something about them.

Occupy Silicon Valley

In late 2019, before we knew about Covid-19, Bank of America strategist Michael Hartnett set out the themes he expected to dominate this decade, many of them in direct contrast to those that dominated the last. One of these was a shift from ‘Occupy Wall Street’ to ‘Occupy Silicon Valley’: in other words, regulatory and public pressure would shift from financial firms to tech firms.

How this pressure will manifest itself, and what changes it will bring about, is hard to predict, given the complexities noted above. The pace of change makes prediction harder still. Regulations may be good at preventing current abuses, but may struggle to keep up as technology reaches new frontiers.

Similarly, it will be hard to identify the tech winners and losers in all of this. Some will have their dominance curtailed, but others will have the scale to adapt successfully. New legislation and regulation may even have unforeseen consequences, such as making it harder for new entrants to break into the market or putting too much surveillance power in the hands of governments.

Technology raises many ethical questions, and we will be exploring more of them in the coming months.

Further reading:

‘Neuroprosthetics’, Wikipedia, https://en.wikipedia.org/wiki/Neuroprosthetics

‘Social Credit System’, Wikipedia, https://en.wikipedia.org/wiki/Social_Credit_System

‘Parler: The Business Ethics Of Framing And De-Platforming’, Forbes, January 2021, https://www.forbes.com/sites/robertzafft/2021/01/18/parler-the-business-ethics-of-framing-and-de-platforming

‘A survey of diminished reality: Techniques for visually concealing, eliminating, and seeing through real objects’, Shohei Mori and others, IPSJ Transactions on Computer Vision and Applications, https://ipsjcva.springeropen.com/articles/10.1186/s41074-017-0028-1

About the author

Patrick Lawlor

Editor

Patrick writes and edits content for Investec Wealth & Investment, and Corporate and Institutional Banking, including editing the Daily View and Monthly View, as well as One Magazine, an online publication for Investec's Wealth clients. Patrick was a financial journalist for many years for publications such as Financial Mail, Finweek and Business Report. He holds a BA and a PDM (Bus.Admin.), both from Wits University.
